Will using HDR in games lower your FPS? As nice as the prettier visuals offered by HDR can be, many gamers prefer to make visual compromises if the exchange grants them a more stable gaming experience through higher framerates. So, will using HDR in games lower your FPS? Let’s find out!

What Is HDR?

First, let’s take a moment to define “HDR”. HDR just means “high dynamic range”, and is primarily a term used for photography in the world outside of video games. With HDR photography, you should be able to capture the nuances of detail in poorly-lit scenes like those photographed at night.

The catch of HDR photography is that it’s actually something of an illusion: you need multiple photos taken at different “exposure” levels, and then stitch the final result together into a single image that brings out the combined detail from both the darkest and brightest versions of the same scene.
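To make the "stitching" idea concrete, here is a minimal sketch of blending two exposures of the same scene. It is a toy illustration and not how any particular camera or editing tool does it: the function names (`well_exposedness`, `fuse_exposures`) and the mid-gray weighting rule are assumptions made up for this example, and pixels are plain brightness values between 0 and 1.

```python
def well_exposedness(value: float) -> float:
    """Weight a pixel in [0, 1]: highest near mid-gray, lowest near pure black or white."""
    # The tiny epsilon keeps the total weight above zero (assumption for this sketch).
    return 1.0 - abs(value - 0.5) * 2.0 + 1e-6


def fuse_exposures(exposures: list[list[float]]) -> list[float]:
    """Blend several exposures of the same scene, pixel by pixel."""
    fused = []
    for pixels in zip(*exposures):
        # Each exposure votes for its own pixel; well-exposed pixels get a louder vote.
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused


# Hypothetical data: the same three pixels shot dark and shot bright.
dark_shot = [0.0, 0.1, 0.5]
bright_shot = [0.5, 0.9, 1.0]
fused = fuse_exposures([dark_shot, bright_shot])
```

In each position, the pixel that is closer to mid-gray dominates the blend, so crushed shadows from the dark shot and blown-out highlights from the bright shot contribute very little to the final image.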

For a more detailed look at HDR in photography and the (beautiful) results, I recommend taking a look at the Digital Trends article on the same topic.

How HDR Works In Games

So, how does this principle of HDR photography carry over to gaming? You obviously can’t render the same frame several times at different exposure levels and stitch the results together. Even if you somehow could do that, it’d be a horrible waste of computational resources.

Fortunately, the nature of games already makes them pretty well-suited to HDR functionality. Since the luminance and color of every in-game character and object are tightly controlled in-engine, the real limiting factor is less about the game and more about the display that you happen to be playing the game on.

HDR in gaming is reliant on an HDR-compliant display. Display HDR technology is also based around capturing high dynamic range (brightest brights, darkest darks), but instead does it by breaking down each frame into different “zones” of lighting. By controlling lighting “zones” on a backlit LCD, or even individual pixels on an OLED panel, display HDR tech can effectively duplicate the benefits of multiple-exposure HDR photography in real time!
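As a rough picture of what lighting "zones" mean, the sketch below splits a frame into a grid and drives each zone only as bright as its brightest pixel. This is a toy model under stated assumptions: real displays use their own (usually undisclosed) dimming logic, the name `zone_backlight` is made up for this example, and the frame is assumed to divide evenly into the zone grid.

```python
def zone_backlight(frame: list[list[float]], zone_rows: int, zone_cols: int) -> list[list[float]]:
    """Return one backlight level per zone: the peak luminance inside that zone.

    `frame` is a 2D grid of luminance values in [0, 1]. Assumes (for this sketch)
    that the frame height and width divide evenly by the zone counts.
    """
    height, width = len(frame), len(frame[0])
    zone_h, zone_w = height // zone_rows, width // zone_cols
    levels = []
    for zr in range(zone_rows):
        row = []
        for zc in range(zone_cols):
            # Collect every pixel that falls inside this zone.
            block = [
                frame[y][x]
                for y in range(zr * zone_h, (zr + 1) * zone_h)
                for x in range(zc * zone_w, (zc + 1) * zone_w)
            ]
            row.append(max(block))
        levels.append(row)
    return levels


# Hypothetical 2x4 frame: dark on the left, bright on the right.
frame = [
    [0.0, 0.1, 0.9, 1.0],
    [0.0, 0.0, 0.2, 0.3],
]
levels = zone_backlight(frame, zone_rows=1, zone_cols=2)
print(levels)  # [[0.1, 1.0]]
```

The dark half of the frame gets a dim backlight while the bright half runs at full power, which is how a zoned display can show deep shadows and bright highlights in the same frame.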

However, not every game is going to support HDR out of the box. Display HDR does require at least some degree of in-software support to function to its fullest potential. For games, this means that you won’t be able to enjoy HDR unless the devs have consciously developed around the feature’s lighting calculations or you use a workaround.

In the world of console gaming, HDR is probably at its current best. This is because HDR on console gaming has been a mainstream-supported feature since the last console generation! For quite a while, in fact, even HDR support on the weakest mainstream console, the Xbox One S, was better than support on Windows. It still sort of is, but Windows actually did add an Auto HDR feature, which is worth talking about.

A Note on Auto HDR in Windows

So, what is Auto HDR?

Basically, Auto HDR refers to the ability for Windows 11 (and 10, at least in an Insider build) to take a non-HDR game and play it back with an HDR-compliant signal.

With the proper “Auto HDR” feature, the brightness information already present in the rendered frame is turned into an HDR “heatmap” for purposes of brightness control in real time, with minimal computational cost. It isn’t the ideal solution, which is properly-coded HDR in the game itself with calibration settings that can be tuned to that specific HDR display, but it is still quite effective.

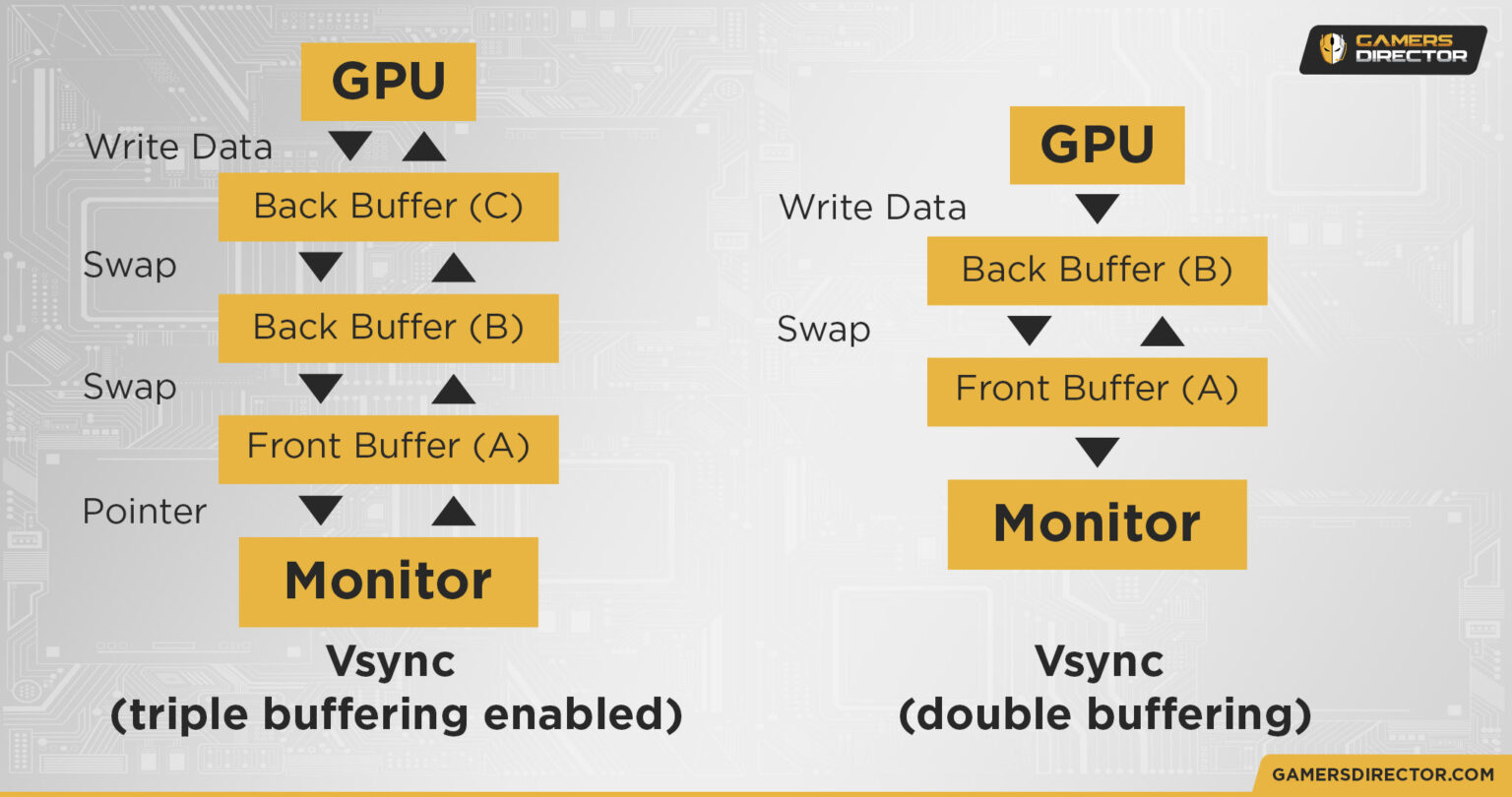

In Windows 10, it functions a little differently. For one, they removed the full Auto HDR implementation because…well, screw you if you don’t pay up for Windows 11 or have a compatible motherboard, I guess. However, you can still “force” HDR in Windows 10 if the game you’re playing is in borderless fullscreen or a window, since enabling HDR in Windows 10 applies basic HDR to the entire DWM (Desktop Window Manager) that handles your desktop rendering unless you are in Exclusive Fullscreen. The main downside of the DWM from a gaming perspective is that it forces Triple-Buffered V-Sync.

By default, all games run on Windows 10 will run in a borderless fullscreen window unless you go out of your way to check “Disable Fullscreen Optimizations” in the .exe’s Compatibility settings. I like doing this to minimize input lag and get the DWM out of the picture in competitive titles, but I also find that with Variable Refresh Rate and Hardware-accelerated GPU scheduling enabled in Windows’ Graphics settings, forcing Exclusive Fullscreen isn’t quite as necessary as it used to be. Completely untethered framerate is less important than a stable and consistent framerate, after all.

How HDR Impacts Gaming Performance

It…basically doesn’t.

Yeah, you heard me right. Remember how I mentioned earlier that most of the stuff HDR needs to function is already in your games? That isn’t just games that are made to support HDR, it’s games in general. Video games have always been pursuing photorealistic, or at least “immersive”, graphics, and lighting has always been a fundamental part of that. Even retro pixel art games have ways of simulating things like “Global Illumination” by changing the lighting of different rooms, objects, and lights within a game.

The recent push toward real-time ray-tracing and high-end HDR displays is just the latest stretch on the road, graphically speaking. While these technologies help us cover those last miles on the way to convincing photorealism in video games, they are not what make a video game immersive. Art direction and skilled labor are what make a video game immersive, not graphics technology in a vacuum.

How HDR Might Negatively Impact Your Gaming Experience

So, HDR doesn’t lower your in-game FPS, though some high-end graphical settings that improve upon it (like real-time ray-tracing) absolutely can. Why, then, is there a concern around HDR performance in games?

If I had to guess, it’s probably down to the fidelity of the HDR effect being used by your display. Let’s take a moment to talk about DisplayHDR— the standard, not the concept.

The lowest grade of DisplayHDR is DisplayHDR 400, which…almost isn’t HDR. It essentially refers to an SDR display with support for an HDR signal, but without the dedicated “zones” enabled by a proper HDR implementation. So whenever HDR needs to make big shifts to the image, it more-or-less means the brightness of the entire screen is going to be dynamically adjusting to whatever content is onscreen at the time.

When the HDR effect isn’t localized per-zone, it looks a little…different. Make no mistake: enabling HDR on a DisplayHDR 400 display can still look quite nice! I personally avoided it on my LG 27GL850-B because I preferred its image quality as I calibrated it in SDR. However, in SDR, I’m also prone to just…minimizing the grays in an image, not necessarily maintaining peak accuracy.

With time, though, I couldn’t help but notice that my monitor’s colors actually did pop more with HDR enabled, even just in the Windows DWM. The main thing I disliked about enabling HDR on a “weak” display was how it seemed to desaturate the image by exposing more grays, but once I calibrated around that…suddenly I could see why people like HDR so much.

There is one downside that cannot be ignored with a DisplayHDR 400 display, though. The fact is, when the entire screen’s brightness is constantly changing in accordance with what’s onscreen, the result can be a visible flicker.
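To see why whole-screen dimming can flicker, consider this toy model, which assumes the display sets a single backlight level per frame equal to that frame's brightest pixel. Real monitors smooth and limit these changes in ways that are not modeled here, and the names `global_backlight_levels` and `largest_jump` are made up for this example.

```python
def global_backlight_levels(frames: list[list[float]]) -> list[float]:
    """One backlight level per frame: the peak luminance anywhere on screen."""
    return [max(frame) for frame in frames]


def largest_jump(levels: list[float]) -> float:
    """Biggest frame-to-frame change in backlight level."""
    return max(abs(b - a) for a, b in zip(levels, levels[1:]))


# Hypothetical scene: a dim room where a bright effect appears for one frame.
# Each frame is a flat list of pixel luminance values in [0, 1].
frames = [
    [0.125, 0.25],   # dim room
    [0.125, 0.75],   # bright effect appears
    [0.125, 0.25],   # effect is gone again
]
levels = global_backlight_levels(frames)
print(levels)                # [0.25, 0.75, 0.25]
print(largest_jump(levels))  # 0.5
```

A single bright object drags the backlight for the whole screen up and back down again, including the parts of the image that never changed. With per-zone dimming, only the zone containing the bright object would need to change.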

A backlight flickering effect in and of itself isn’t too bad— in fact it reminds me of gaming on a CRT display back in the day— but it’s more noticeable in some titles than others. It’s especially more noticeable in third-person action games that don’t support HDR, even 2D ones, with the camera drawn back from the player character. In a first-person shooter, though, DisplayHDR 400 pretty much works just fine, since your in-game “viewpoint” will always be limited to the room your character is standing in.

Me personally? I don’t mind enough to disable it. My monitor is even better with HDR enabled now that I’ve coped with the existence of the color gray. But if you notice distracting backlight flickering in a certain title that doesn’t support HDR, feel free to disable fullscreen optimizations in the .exe so you don’t have to deal with the DWM. The more things change, the more things stay the same.

Parting Words

And that’s it!

Will using HDR in games lower your FPS? The definitive answer is no, but if your HDR sucks, it can cause more noticeable flickering when you’re playing unsupported titles, which can definitely suck. Overall, the enjoyment you’ll get out of HDR will always be limited by both your display and your own willingness to tweak the experience. On consoles, you don’t even need to think about it. But on PC, you can always choose to make the most of it, should you please, even if it’s not a real HDR game.

None of it’s real HDR. It’s video games.